Este poderoso método de aprendizaje autosupervisado

Imagine a world where computer vision models could learn from any set of images without relying on labels or fine-tuning. Sounds futuristic, right? Well, Meta AI has made that dream a reality with its latest innovation, DINOv2. This powerful self-supervised learning method is poised to transform the way businesses use computer vision in various applications, from e-commerce to manufacturing and beyond. In this blog post, we’ll explore what DINOv2 is, how it works, and the exciting possibilities it opens up for businesses. So, buckle up, and let’s dive in!

Understanding DINOv2

DINOv2 is a cutting-edge method for training computer vision models using self-supervised learning. It allows the model to learn from any collection of images without needing labels or metadata. Unlike traditional image-text pretraining methods, which rely on captions to learn about an image’s content, DINOv2 is based on self-supervised learning, meaning it doesn’t rely on text descriptions. DINOv2 learns to predict the relationship between different parts of an image, which helps it to understand and represent the underlying structure of the image. This enables the model to learn more in-depth information about images, such as spatial relationships and depth estimation.

But what does this mean for businesses? In simple terms, DINOv2 can make computer vision applications more accurate, efficient, and versatile.

Business Impact

DINOv2 matters for businesses because it provides a powerful and flexible way to train computer vision models without requiring large amounts of labeled data. This means that businesses can more easily and cost-effectively develop computer vision applications for various use cases, such as object recognition, image classification, and segmentation. By using self-supervision, DINOv2 can learn from any collection of images, making it suitable for use in a wide range of applications, even in specialized fields where images are difficult or impossible to label. Additionally, DINOv2’s strong performance and flexibility make it suitable for use as a backbone for many different computer vision tasks, reducing the need for businesses to develop and train separate models for each task. This can save time and resources and enable businesses to develop more advanced and sophisticated computer vision applications.

Challenges relying on captions

Over the years, image-text pre-training has been the go-to approach for various computer vision tasks. However, the approach relies solely on written captions to learn the meaning behind an image, overlooking significant details that are not mentioned in the text description. For instance, a caption of a picture featuring a chair in a spacious blue room may only mention “single oak chair,” but this disregards crucial information such as the chair’s location in the room, the presence of a wall clock, and the overall room décor.

Potential Real-World applications of DINOv2

To better understand the impact of DINOv2, let’s explore some real-world examples where DINOv2 could be used:

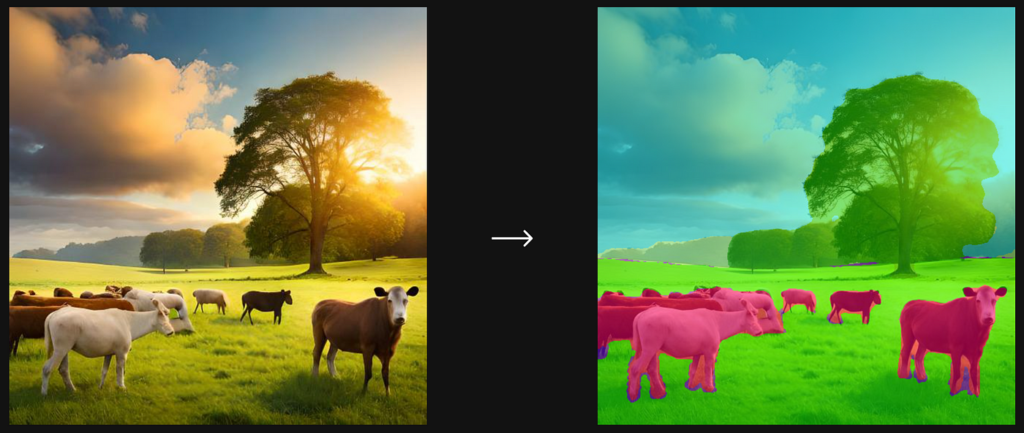

1. Object Identification

One good business example of object recognition in livestock counting could be a large-scale livestock farming operation. By utilizing object recognition technology, such a company could automate the process of livestock counting, which would save them a significant amount of time and money. The technology could accurately detect and count each animal, and the data could be used for various purposes such as inventory management, herd health monitoring, and identifying animals for medical treatment.

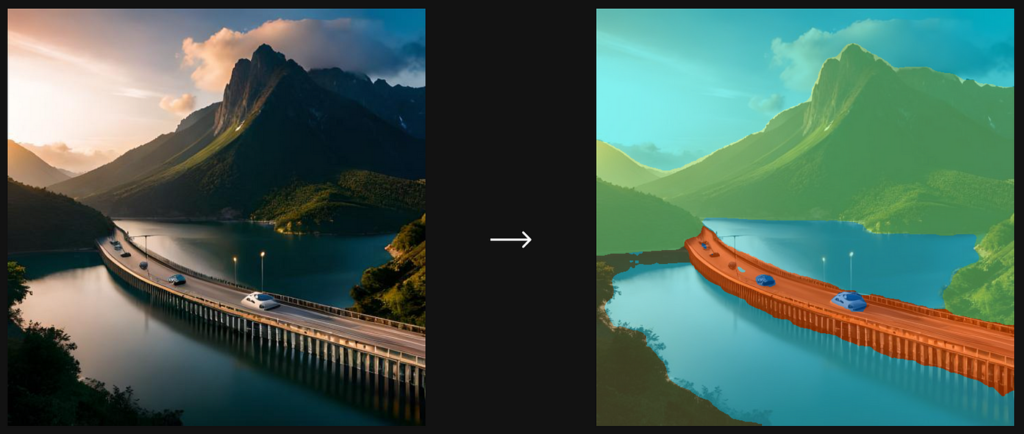

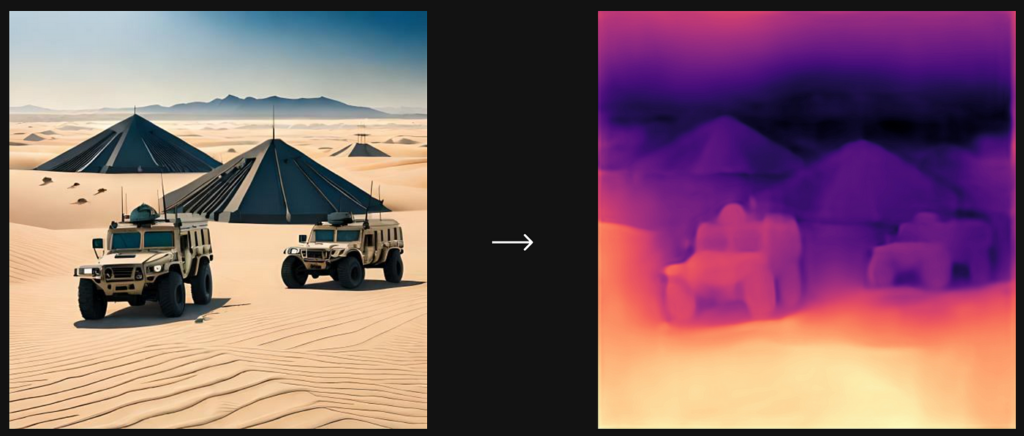

2. Depth measurement

For instance, security cameras monitoring a large area could use distance estimates using per-pixel depth to quickly identify and differentiate objects at different distances, such as individuals or vehicles that are closer or farther away. This could help security personnel quickly assess potential threats and respond accordingly.

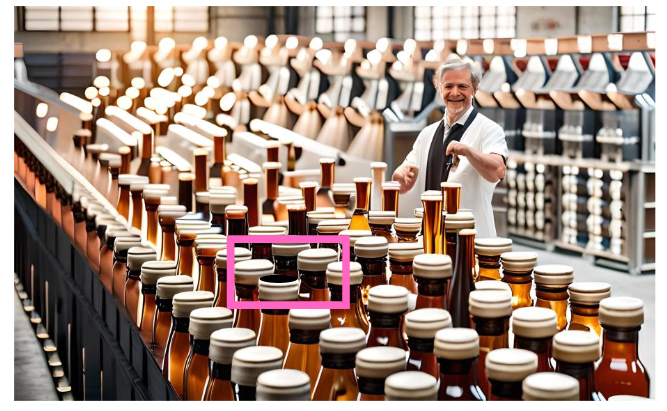

3. Object Classification

One potential business use case for image object classification in manufacturing could be to automatically detect and classify defects in products during the production process. The system could automatically identify and flag any defective products for inspection or removal from the production line. This could improve product quality control and reduce the risk of defective products reaching customers.

4. Object retrieval

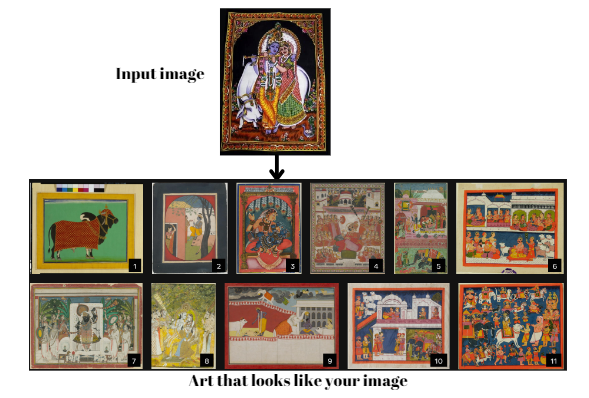

Imagine you have a large digital collection of art/paintings, and you want to find paintings in your collection that are similar to a particular painting. Using image retrieval, you can input the image of the painting you want to find similar pieces to, and the algorithm will use the frozen features from the painting to search through your collection and find other paintings with similar features. This approach allows you to efficiently find other Tanjore paintings that have similar styles or color schemes without manually searching through your entire collection.

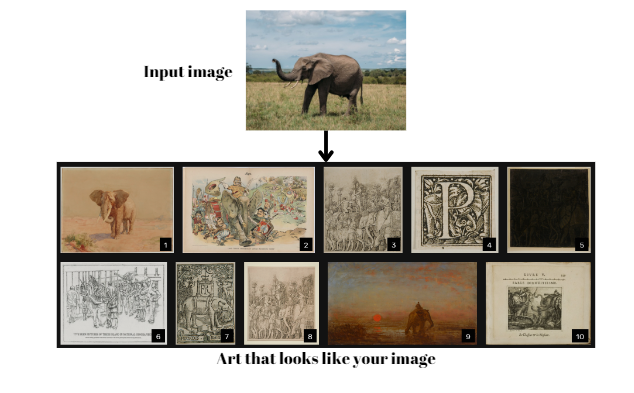

Another example of image retrieval is where frozen features are used to find similar images of elephants from a large image collection. This technique involves encoding the images into a set of numerical values (features) and then comparing these features to those of other images to find similarities. This approach can be used in various applications, such as in the field of art to find similar art pieces or in the field of wildlife conservation to identify and track individual animals.

Image retrieval has several practical uses in various fields like e-commerce, medical imaging, art and cultural preservation, and advertising. In the medical domain, for instance, doctors and researchers can use image retrieval to search for comparable medical images, like MRI scans or X-rays. By comparing images with a database of similar ones, this approach can aid in the diagnosis of rare or complicated medical cases.

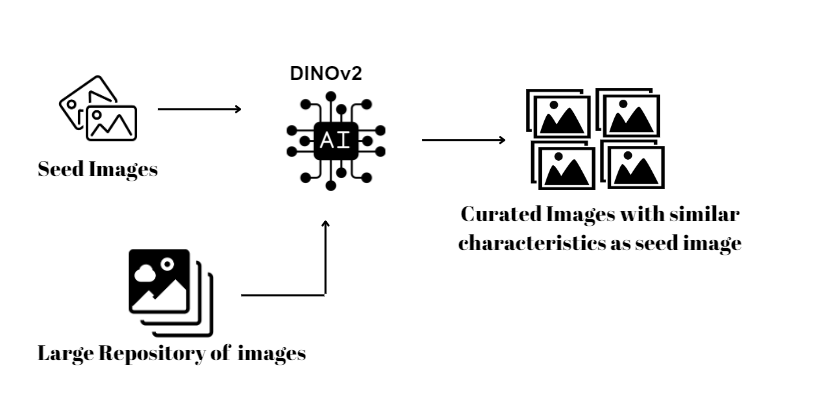

5. Image Data Curation

In the medical field, obtaining a sufficient volume of high-quality image data can be challenging. A researcher studying specific tumor patterns would benefit from the ability to input a seed image and search through a public dataset of pathology images to eliminate irrelevant images and balance the dataset across concepts.

For example, the authors of the paper curated a set of seed images and retrieved images that closely matched those seeds to create a pretraining dataset of 142 million images from a source pool of 1.2 billion images for their own study.

Computer vision + Generative AI

As the field of computer vision continues to evolve, DINOv2 is expected to play a significant role in shaping its future. Imagine being able to integrate DINOv2 with large language models to develop more complex AI systems that can reason about images in a deeper way than just describing them with a single text sentence. This integration will enable businesses to leverage the power of both visual and textual understanding in their AI applications. The future of computer vision + LLM will likely witness more advancements, use cases, and collaborations, which will bring a new era of computer vision applications to businesses worldwide.

One example of using computer vision and generative AI together in manufacturing is to generate new design variations for a product based on images of existing designs. Computer vision algorithms can be used to extract key features of the product from images, and then generative AI can be used to create new design variations by altering these features in a controlled manner. This approach can help manufacturers to explore a wider range of design options and potentially identify new and innovative product designs.

Conclusion

In conclusion, DINOv2 is a powerful pre-training method for computer vision models that don’t require any fine-tuning. With its unique approach to learning visual representations from large, uncurated datasets, it has shown promising results in a variety of applications, from image classification to object detection. Its ability to perform well even with limited labeled data makes it a valuable tool for businesses looking to incorporate computer vision into their operations. As research into DINOv2 and its capabilities continues, we can expect to see more innovative applications of this technology in the future. With the combination of computer vision and generative AI, we can expect to see a significant transformation in various industries, including healthcare, manufacturing, and agriculture.

Fuente: https://towardsai.net