A team of engineers at Rice University has developed a system that leverages existing data sources to sense road conditions during flooding, which can facilitate safe and efficient emergency response, reduce vehicle-related deaths, and improve community resilience.

Abstract

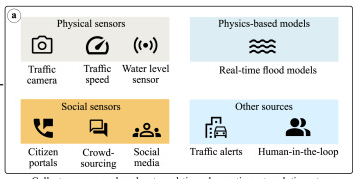

Reliable sensing of road conditions during flooding can facilitate safe and efficient emergency response, reduce vehicle-related fatalities, and enhance community resilience. Existing situational awareness tools typically depend on limited data sources or simplified models, rendering them inadequate for sensing dynamically evolving roadway conditions. Consequently, roadway-related incidents are a leading cause of flood fatalities (40%–60%) in many developed countries. While an extensive network of physical sensors could improve situational awareness, such networks are expensive to operate at scale. This study proposes an alternative: a framework that leverages existing data sources, including physical, social, and visual sensors and physics-based models, to sense road conditions. It uses source-specific data collection and processing, data fusion and augmentation, and network and spatial analysis workflows to infer flood impacts at the link and network levels. A limited case study application of the framework in Houston, Texas, indicates that repurposing existing data sources can improve roadway situational awareness. This framework offers a paradigm shift for improving mobility-centric situational awareness using open-source tools, existing data sources, and modern algorithms, thereby providing a practical solution for communities. The paper’s contributions are timely: it provides an equitable framework to improve situational awareness in an era of climate change and worsening urban flood risk.

Introduction

Flooding poses a significant risk to urban mobility: inundated roadways and overtopped bridges isolate communities and limit mobility, while the paucity of reliable real-time road condition data causes delays and detours, reduces emergency response efficiency, and creates safety risks [1], [2], [3], [4], [5], [6], [7], [8], [9], [10]. Further, existing situational awareness tools are often limited in their ability to accurately sense dynamically evolving road conditions [11], [12], which constrains communities’ ability to respond to flood events. Consequently, mobility-related incidents are linked to 40%–60% of flood fatalities in many developed economies [3], [4], [5], [13]. Although structural changes are necessary to reduce flood risk, improving situational awareness could, in the short term, enhance our ability to sense and respond to flooding, reduce flood casualties, and strengthen community resilience. Reliable situational awareness tools are especially essential given climate-exacerbated flood risk to urban mobility [14], [15], aging or inadequate stormwater infrastructure [16], and the scale of emergency response in major urban centers (for example, first responders evacuated more than 122,300 people during Hurricane Harvey [17]). Situational awareness is defined here as the ability to sense flood impacts on road transportation networks at the link and network levels in a timely and accurate manner.
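To make the link-level versus network-level distinction concrete, the minimal Python sketch below treats a link reported as flooded as impassable (link level) and then checks which intersections can still be reached from an emergency depot (network level). The toy network, the node names, and the reachability criterion are hypothetical illustrations, not the OpenSafe Fusion implementation.

```python
from collections import deque

# Hypothetical toy road network: each tuple is an undirected link between two intersections.
ROAD_LINKS = {
    ("depot", "a"), ("a", "b"), ("b", "c"),
    ("a", "d"), ("d", "c"), ("c", "e"),
}


def reachable_nodes(links, flooded, source):
    """Return the nodes reachable from `source` using only passable links."""
    adjacency = {}
    for u, v in links:
        # Link level: a link reported as flooded (in either direction) is impassable.
        if (u, v) in flooded or (v, u) in flooded:
            continue
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    # Network level: breadth-first search over the remaining passable links.
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def isolated_nodes(links, flooded, source):
    """Nodes that exist in the network but can no longer be reached from `source`."""
    all_nodes = {node for link in links for node in link}
    return all_nodes - reachable_nodes(links, flooded, source)


print(isolated_nodes(ROAD_LINKS, {("a", "b")}, "depot"))  # set(): a detour via d remains
print(sorted(isolated_nodes(ROAD_LINKS, {("a", "b"), ("d", "c")}, "depot")))  # ['b', 'c', 'e']
```

Flooding one link may only force a detour, while flooding a second link can cut off several intersections at once, which is why link-level status alone does not describe network-level impact.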

Most existing situational awareness tools for detecting flooded roads, or flooding in general, depend on a limited number of sources and consequently inherit their limitations, biases, and inaccuracies. For example, although physical sensors [18], [19], [20], [21], [22] deployed along streets can detect road conditions reliably, deploying, maintaining, and securing sensors at scale is prohibitively expensive. Similarly, although social sensors (social media platforms [23] or custom crowdsourcing tools [24], [25]) can offer enhanced situational awareness, their data are often replete with bias, misinformation, noise, or model errors [26], [27], [28], [29], which limits their use as the sole source of situational awareness data for emergency response. Studies [30], [31], [32], [33] have also successfully used remote sensing techniques (satellites, UAVs, and other aerial platforms) to infer road or flood conditions. Although these platforms can observe large areas, satellite revisit times introduce delays and aerial platforms are often unavailable during inclement weather such as hurricanes, which limits their use in emergency response applications that require minimal time lag. With recent advances in deep learning [34], [35], automated image processing models [36], [37], [38] can infer roadway flood conditions from traffic camera images; however, camera data are often limited to select watchpoints along major highways. Similarly, authoritative data from departments of transportation [39], [40] usually cover only major highways or arterial roads, leaving little data for minor roads and residential streets. Recently, studies [41], [42], [43], [44] have shown successful applications of machine learning models to predict flooding and roadway status. Because these models are often trained on limited historical or simulated data, their reliability and generalizability for unseen future events are unknown.
Moreover, data-driven models inherit the biases and uncertainties of their training data, limiting their application. Studies [45], [46], [47], [48], [49], [50] have also used physics-based models to predict roadway conditions at select watchpoints as well as at the watershed level. While more reliable than surrogate models for unseen storms, physics-based models are computationally expensive to run in real time, and simplifications such as the inability to represent storm drainage networks can introduce model errors. Some studies have used precompiled maps [51] to avoid the computational burden of real-time models at the cost of accuracy. Similarly, studies have attempted to correlate road conditions with nearby gages [52] or rainfall sensors [39], with varying levels of accuracy. However, such simplified or empirical methods are often insufficient for large-scale emergency response and other high-risk applications. While these frameworks have advantages and work reliably in limited case study applications, they often fail to provide comprehensive mobility-centric situational awareness at scale.

The shortcomings of current mobility-centric situational awareness frameworks stem primarily from limited real-time data, as they rely solely on a small number of sources. An alternative is to fuse information from multiple sources using data fusion techniques. When data from compatible sources are combined, their collective observations can overcome their individual limitations. At the same time, data fusion introduces the challenge of combining information from disparate sources with varying spatial and temporal resolution, reliability, robustness, and modality. Although real-time mobility-centric applications are limited, examples of data fusion-based methods exist for flood monitoring and hindcasting. For example, Wang et al. [53] combined social media and crowdsourced data for flood monitoring. Rosser et al. [54] fused remote sensing data with social media and topographic data for flood inundation mapping. Ahmad et al. [30] used remote sensing and social media to detect passable roads after floods. Frey et al. [55], [56], [57] used a digital elevation model and remote sensing images to identify trafficable routes. Albuquerque et al. [58] combined social media and authoritative data to filter reliable social media messages. Bischke et al. [59] used social multimedia and satellite imagery to detect flooding. Werneck et al. [60] proposed a graph-based fusion framework for flood detection from social media images. These methods demonstrate the data fusion approach for situational awareness or hindcasting, albeit with very few data sources. Fusing observations from a limited number of sources (especially social or remote sensors) might not provide the reliability and minimal time lag that emergency response applications require.
In summary, a comprehensive mobility-centric situational awareness framework that can sense roadway conditions at the link and network levels is still lacking in the literature. Such a framework should ideally (a) observe most roads, including residential streets, with minimal time lag through all stages of flooding; (b) yield reliable and accurate predictions free of spatial, temporal, and social bias or inequity; (c) be robust enough to provide reliable data even when some of the data sources it depends on fail; (d) quantify link- and network-level impacts of flooding to provide a holistic view; and (e) be accessible to most communities. This study addresses this need for improved roadway sensing and proposes a mobility-centric real-time situational awareness framework that leverages data fusion.
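As a hedged illustration of how observations with different reliabilities might be combined for a single road link, the sketch below uses a naive Bayes log-odds update. This is a generic fusion technique swapped in for illustration, not the OpenSafe Fusion method: it assumes sources err independently and symmetrically, and the source names and reliability values are hypothetical. Because an offline source simply contributes no term, the update also shows one way to satisfy requirement (c).

```python
import math


def fuse_link_observations(observations, reliability, prior=0.5):
    """Fuse binary flood reports for one road link into P(link is flooded).

    observations: source name -> True (reported flooded) or False (reported passable).
                  Offline sources are simply absent, so fusion degrades gracefully.
    reliability:  source name -> assumed probability that the source's report is correct.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    log_odds = math.log(prior / (1.0 - prior))
    for source, flooded in observations.items():
        r = reliability[source]
        if not 0.0 < r < 1.0:
            raise ValueError(f"reliability of {source!r} must be strictly between 0 and 1")
        # Evidence weight of one report under a symmetric error model (assumption).
        weight = math.log(r / (1.0 - r))
        log_odds += weight if flooded else -weight
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical reliabilities for three source types.
RELIABILITY = {"traffic_camera": 0.9, "social_media": 0.6, "flood_model": 0.75}

# A camera reports flooding, a social media post reports the road passable,
# and the flood model is offline, so it contributes nothing.
p = fuse_link_observations({"traffic_camera": True, "social_media": False}, RELIABILITY)
print(round(p, 3))  # 0.857
```

A source with reliability 0.5 receives zero weight, so an uninformative source cannot shift the estimate in either direction.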

While a data fusion approach could transform situational awareness, a key challenge remains unaddressed: data sources that directly report roadway flood conditions are scarce. In contrast, urban centers are replete with data sources that may directly or indirectly indicate flooding or road conditions, such as citizen service portals run by the city or utility providers, water level sensors along streams, and traffic cameras. These sources are often not designed to sense flood conditions on roads, although they may provide indirect observations of flooding or its impacts on roads. For example, live video offers visual evidence of roadway flooding, and water level sensors provide insight into roads colocated with streams. The value of such data sources was evident during Hurricane Harvey in Houston: many people, including emergency responders, resorted to manually examining these sources to infer probable road conditions because reliable real-time road condition data were lacking [11]. While manual examination of multiple data sources provided temporary relief, it could also result in information scatter, cognitive overload, an increased likelihood of misinterpretation, and the risk of using outdated data. An alternative is to combine observations from multiple public data sources in an automated data fusion framework to sense current flood conditions. Such a framework could significantly improve situational awareness: it could enhance data availability; reduce information scatter; improve the accuracy, robustness, and reliability of road condition data; and reduce the cognitive overload of first responders. Moreover, such a data fusion-centric approach might be more affordable for communities than deploying, maintaining, and securing physical sensors at scale.
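The water level sensor example above can be pictured as an adapter that turns an indirect observation into a road-link observation. The minimal Python sketch below assumes planar coordinates in meters, a 200 m colocation radius, a known road low-point elevation, and a 0.15 m depth threshold; these values, the `RoadLink` type, and the function names are all hypothetical. It ignores storm drains, backwater effects, and terrain between stream and road, so it is a crude screen rather than the paper's processing step.

```python
import math
from dataclasses import dataclass


@dataclass
class RoadLink:
    link_id: str
    x: float               # projected coordinates of the link's low point, in meters (hypothetical)
    y: float
    low_point_elev: float  # road surface elevation at the low point, in meters (hypothetical)


def nearest_link(sensor_xy, links, max_dist=200.0):
    """Geolocate a sensor to the closest road link within `max_dist` meters, if any."""
    best, best_dist = None, max_dist
    for link in links:
        dist = math.hypot(link.x - sensor_xy[0], link.y - sensor_xy[1])
        if dist <= best_dist:
            best, best_dist = link, dist
    return best


def infer_from_water_level(sensor_xy, water_surface_elev, links, depth_threshold=0.15):
    """Translate a stream water level reading into an indirect road observation.

    Returns (link_id, flooded), or None when no road link is colocated with the sensor.
    """
    link = nearest_link(sensor_xy, links)
    if link is None:
        return None
    depth = water_surface_elev - link.low_point_elev  # estimated water depth over the road
    return link.link_id, depth > depth_threshold


links = [RoadLink("L1", 0.0, 0.0, 10.0), RoadLink("L2", 500.0, 0.0, 8.0)]
print(infer_from_water_level((20.0, 0.0), 10.5, links))  # ('L1', True)
```

The output has the same `(link_id, flooded)` shape a camera or crowdsourced report could be reduced to, which is what allows heterogeneous sources to be fused per link.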

This study addresses the need for reliable mobility-centric situational awareness and presents a new framework called Open Source Situational Awareness Framework for Mobility using Data Fusion (OpenSafe Fusion). OpenSafe Fusion combines data collection and processing, data fusion and augmentation, and spatial and network analyses to infer link- and network-level impacts of flooding by fusing observations from real-time data sources that observe flooding or roadway conditions. Any new situational awareness framework should address the needs of its stakeholders; accordingly, the design of this framework is informed by extensive stakeholder interviews (n=24) and a needs assessment following the tenets of a user-centered design process [61], described in detail in Panakkal et al. [11]. This paper focuses on the methodological underpinnings of OpenSafe Fusion and its components. The remainder of the paper is organized into three sections. The next section provides a brief overview of the OpenSafe Fusion methodology, followed by a case study application of the framework in Houston, Texas. The final section presents key insights from the experiments in the context of mobility-centric situational awareness.


Proposed architecture and methods

OpenSafe Fusion (Fig. 1) is a modular framework composed of five steps: data acquisition and processing, data fusion, data augmentation, impact assessment, and communication. During the data acquisition step (Fig. 1a), real-time data from select sources are acquired, processed to infer road conditions, and geolocated. During the data fusion step (Fig. 1b), road conditions inferred from the selected sources in the data acquisition step are fused at the road link level to estimate road flood
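Because the framework is described as modular, one way to picture its five steps is as interchangeable functions applied in sequence to a shared state. In the minimal Python sketch below, the stage bodies are deliberately simple placeholders: an "any source reports flooding" fusion rule, a model-estimate fallback for augmentation, and link-level assessment only. These rules, the state keys, and the adapter interface are assumptions for illustration and do not reproduce OpenSafe Fusion's actual logic.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Stage = Callable[[State], State]


def acquire(state: State) -> State:
    # Data acquisition and processing: each hypothetical adapter returns
    # already-geolocated (link_id, flooded) observations from one source.
    state["observations"] = [obs for adapter in state["adapters"] for obs in adapter()]
    return state


def fuse(state: State) -> State:
    # Data fusion (placeholder rule): a link is flooded if any source reports flooding.
    status: Dict[str, bool] = {}
    for link_id, flooded in state["observations"]:
        status[link_id] = status.get(link_id, False) or flooded
    state["link_status"] = status
    return state


def augment(state: State) -> State:
    # Data augmentation (placeholder): fill links no source observed from a model estimate.
    for link_id, flooded in state["model_estimate"].items():
        state["link_status"].setdefault(link_id, flooded)
    return state


def assess(state: State) -> State:
    # Impact assessment (link level only in this sketch): list links currently flooded.
    state["flooded_links"] = sorted(k for k, v in state["link_status"].items() if v)
    return state


def communicate(state: State) -> State:
    # Communication: turn the assessment into a short message for end users.
    flooded = state["flooded_links"]
    state["message"] = ("Flooded links: " + ", ".join(flooded)) if flooded else "No flooded links reported"
    return state


PIPELINE: List[Stage] = [acquire, fuse, augment, assess, communicate]


def run(pipeline: List[Stage], state: State) -> State:
    for stage in pipeline:
        state = stage(state)
    return state


result = run(PIPELINE, {
    "adapters": [lambda: [("L1", True), ("L2", False)], lambda: [("L2", False)]],
    "model_estimate": {"L1": False, "L3": True},
})
print(result["message"])  # Flooded links: L1, L3
```

Keeping each step behind the same `State -> State` signature means a stage such as `fuse` can be replaced with a more careful rule without touching acquisition or communication.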

Case study evaluation

This section presents results from case study experiments designed to evaluate the strengths and limitations of the OpenSafe Fusion framework. A limited case study deployment of the framework is developed for Houston, Texas. Data sources in the study region are analyzed, and OpenSafe Fusion workflows are created. The framework is evaluated by reenacting Hurricane Harvey (2017), and OpenSafe Fusion model predictions are compared with ground observations during the reenactment to quantify
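One common way to compare link-level predictions with ground observations is a confusion matrix. The minimal Python sketch below computes accuracy, precision, recall, and a coverage ratio (the share of observed links for which the framework produced any prediction). These metrics, the choice to exclude unpredicted links from the confusion matrix, and the link identifiers are generic assumptions, not necessarily what the study reports.

```python
def evaluate(predicted, observed):
    """Compare predicted link status with ground observations.

    predicted: link_id -> True (flooded) / False (passable) from the framework.
    observed:  link_id -> True / False from ground observations.
    Links with a ground observation but no prediction lower coverage only.
    """
    tp = fp = fn = tn = 0
    for link_id, truth in observed.items():
        if link_id not in predicted:
            continue
        pred = predicted[link_id]
        if pred and truth:
            tp += 1
        elif pred:
            fp += 1  # predicted flooded, observed passable
        elif truth:
            fn += 1  # predicted passable, observed flooded
        else:
            tn += 1
    n = tp + fp + fn + tn
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / n if n else nan,
        "precision": tp / (tp + fp) if tp + fp else nan,
        "recall": tp / (tp + fn) if tp + fn else nan,
        "coverage": n / len(observed) if observed else nan,
    }


scores = evaluate(
    predicted={"L1": True, "L2": True, "L3": False, "L4": False},
    observed={"L1": True, "L2": True, "L3": True, "L4": False, "L5": True},
)
print(scores)  # accuracy 0.75, precision 1.0, recall about 0.667, coverage 0.8
```

Reporting coverage next to accuracy matters here because a framework can look accurate while observing only a few major roads, which is the gap the introduction identifies.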

Discussions and conclusions

This paper presents the methodological underpinnings of the OpenSafe Fusion framework. OpenSafe Fusion addresses a key impediment to improving situational awareness, namely the lack of reliable real-time data on road conditions during flooding, and offers a real-time mobility-centric situational awareness framework. While additional research is required, the presented case study shows that fusing multi-modal observations from existing data sources can significantly improve our ability to sense flood

CRediT authorship contribution statement

Pranavesh Panakkal: Writing – original draft, Visualization, Validation, Software, Methodology, Investigation, Formal analysis. Jamie Ellen Padgett: Writing – review & editing, Supervision, Project administration, Funding acquisition.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors gratefully acknowledge the support of this research by the National Science Foundation, United States (award numbers 1951821 and 2227467) and the National Academies of Sciences, Engineering, and Medicine, Gulf Research Program (award number 2000013194). The authors thank Dr. Devika Subramanian for her general guidance and support in designing the image classifier. The authors also thank Allison Wyderka for sharing flood model results and data for the case study and Johnathan Roberts

Source: https://www.sciencedirect.com